In the fast-evolving world of online streaming, OTT app data scraping has become crucial as Over-The-Top (OTT) platforms gain prominence for content consumption. With the rapid expansion of these platforms, the demand for practical solutions to scrape OTT app data is increasing. This comprehensive review delves into various tools and libraries for OTT app data scraping, highlighting their features, benefits, and best practices. By leveraging these advanced solutions, users can efficiently gather and analyze data from different OTT services, including streaming media apps. Understanding how to utilize streaming app data scraping will enable you to stay competitive and informed in the dynamic landscape of streaming media.

Introduction to OTT App Scraping

OTT apps offer a wide range of content, including movies, TV shows, and live streaming services. Scraping data from these platforms can be valuable for various purposes, such as content analysis, competitive intelligence, and market research. However, scraping OTT apps comes with challenges, including dealing with dynamic content, handling anti-scraping measures, and ensuring compliance with legal and ethical standards.

Critical Considerations for OTT App Scraping

Before diving into specific tools and libraries, it's essential to understand the critical considerations for OTT app scraping:

1. Dynamic Content: OTT apps often use JavaScript to load content dynamically, which requires scraping tools capable of executing JavaScript and rendering web pages.

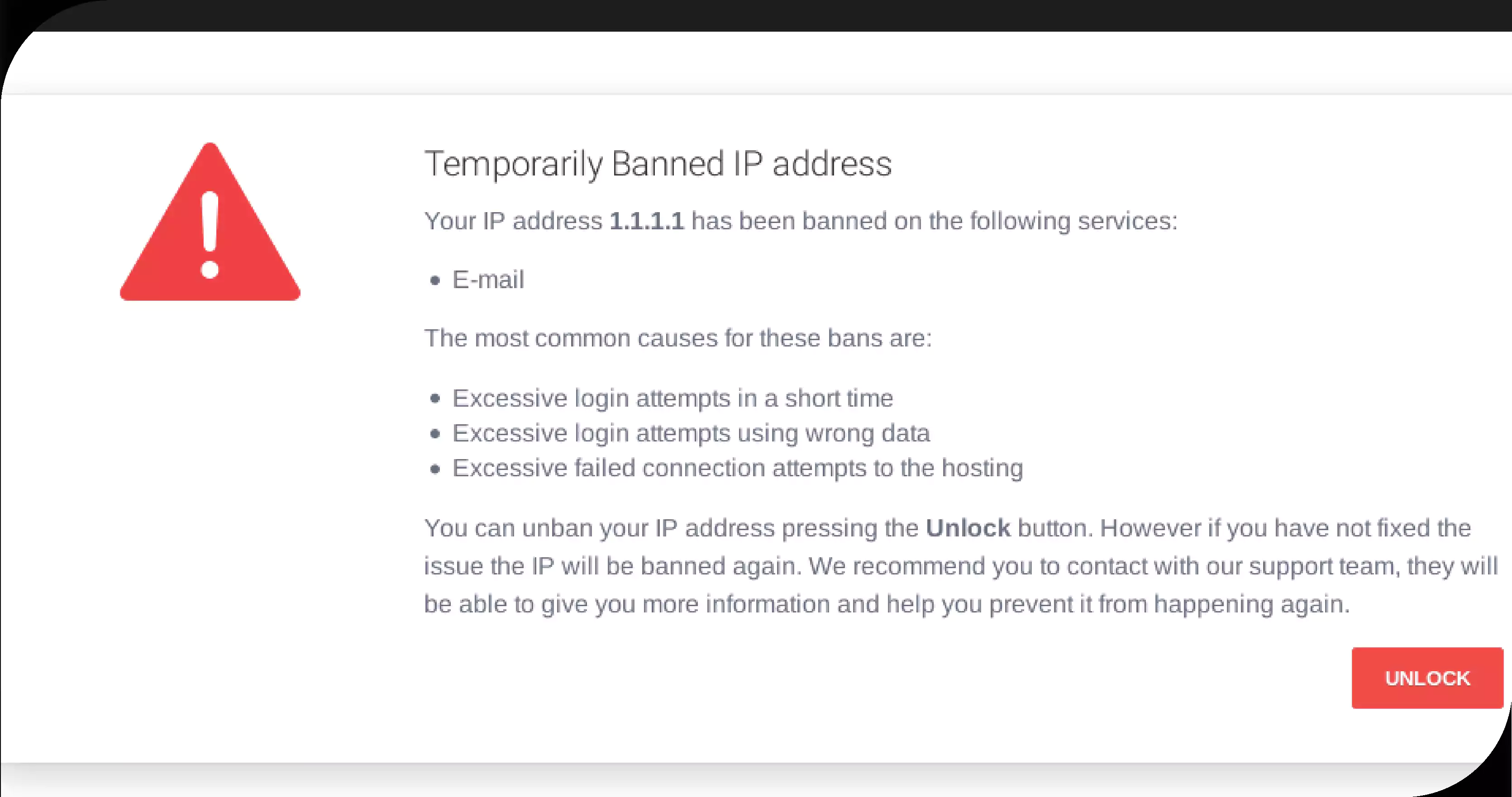

2. Anti-Scraping Measures: Many OTT platforms implement measures to prevent scraping, such as CAPTCHA challenges, IP blocking, and rate limiting.

3. Data Formats: Data on OTT platforms may be presented in various formats, including HTML, JSON, and XML. Scraping tools must be able to handle each of these formats.

4. Legal and Ethical Issues: Ensure that scraping activities comply with legal requirements and respect the terms of service of the platforms.
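To make the data-format consideration concrete, here is a minimal sketch that reads the same catalog entry from a JSON payload and from an XML payload using only the Python standard library. The payloads and the field names (`title`, `year`) are invented for illustration and are not taken from any real OTT platform.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical payloads; real platforms will use different structures.
JSON_PAYLOAD = '{"items": [{"title": "Example Show", "year": 2021}]}'
XML_PAYLOAD = (
    "<items><item><title>Example Show</title><year>2021</year></item></items>"
)


def titles_from_json(payload: str) -> list[dict]:
    """Extract title records from a JSON document."""
    data = json.loads(payload)
    return [
        {"title": item["title"], "year": int(item["year"])}
        for item in data["items"]
    ]


def titles_from_xml(payload: str) -> list[dict]:
    """Extract the same records from an XML document."""
    root = ET.fromstring(payload)
    return [
        {"title": item.findtext("title"), "year": int(item.findtext("year"))}
        for item in root.iter("item")
    ]
```

Normalizing every source into one record shape early on keeps the rest of the pipeline independent of how a given platform happens to serve its data.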

Tools and Libraries for OTT App Scraping

1. Web Scraping Libraries

BeautifulSoup

BeautifulSoup is a widely used Python library for parsing HTML and XML documents. It provides a simple API for navigating and searching the parse tree. Although BeautifulSoup alone does not handle JavaScript rendering, it is highly effective when combined with other libraries that manage dynamic content.

• Key Features:

• Easy to use and integrate with other libraries.

• Excellent for parsing and extracting data from static HTML content.

• Supports multiple parsers, including lxml and html.parser.

• Best Use Case:

• Ideal for scraping static pages, or for parsing HTML that another tool has already rendered after executing JavaScript.
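As a small sketch of that use case, the snippet below parses a static HTML fragment with BeautifulSoup and the built-in `html.parser`. The markup, the class names (`title-card`, `genre`), and the `parse_catalog` helper are assumptions made up for the example.

```python
from bs4 import BeautifulSoup

# Hypothetical static markup; class names are invented for illustration.
HTML = """
<ul class="catalog">
  <li class="title-card"><a href="/watch/101">Example Show</a>
      <span class="genre">Drama</span></li>
  <li class="title-card"><a href="/watch/102">Another Title</a>
      <span class="genre">Comedy</span></li>
</ul>
"""


def parse_catalog(html: str) -> list[dict]:
    """Return one record per title card found in the markup."""
    soup = BeautifulSoup(html, "html.parser")
    records = []
    for card in soup.find_all("li", class_="title-card"):
        link = card.find("a")
        genre = card.find("span", class_="genre")
        records.append(
            {
                "title": link.get_text(strip=True),
                "url": link["href"],
                "genre": genre.get_text(strip=True),
            }
        )
    return records


records = parse_catalog(HTML)
```

The same function works unchanged on HTML produced by a browser automation tool, which is how BeautifulSoup is typically paired with a renderer for dynamic pages.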

Scrapy

Scrapy is an open-source web scraping framework written in Python. It is designed for large-scale web scraping and has built-in support for handling requests, parsing responses, and managing data pipelines.

• Key Features:

• Powerful and flexible framework for large-scale scraping projects.

• Built-in support for handling requests, managing cookies, and handling retries.

• Extensible with middlewares and custom pipelines.

• Best Use Case:

• Suitable for comprehensive scraping projects involving multiple pages and complex data extraction requirements.

Selenium

Selenium is a popular tool for automating web browsers. It supports various programming languages and can interact with web elements, execute JavaScript, and handle dynamic content.

• Key Features:

• Capable of interacting with web elements and executing JavaScript.

• Supports various browsers, including Chrome, Firefox, and Safari.

• Ideal for scraping dynamic content that requires user interactions.

• Best Use Case:

• Effective for scraping websites that rely heavily on JavaScript and require interaction with web elements.

2. Headless Browsers

Puppeteer

Puppeteer, a Node.js library, offers a high-level API for controlling headless Chrome or Chromium browsers. It is particularly useful for rendering JavaScript-heavy web pages, taking screenshots, and generating PDFs.

• Key Features:

• Drives a headless browser with full support for modern web features.

• Capable of rendering JavaScript and interacting with web elements.

• Supports screenshot and PDF generation.

• Best Use Case:

• Excellent for scraping JavaScript-heavy content and performing complex interactions on web pages.

Playwright

Playwright is a browser automation library that originated in Node.js and also offers bindings for Python, Java, and .NET. It provides a high-level API for controlling Chromium, Firefox, and WebKit, and offers features for handling dynamic content and automating interactions.

• Key Features:

• Supports multiple browsers and platforms.

• Provides capabilities for handling dynamic content and user interactions.

• Offers built-in support for handling multiple pages and browser contexts.

• Best Use Case:

• Suitable for comprehensive scraping tasks involving multiple browsers and complex interactions.

3. Data Extraction Tools

Requests-HTML

Requests-HTML is a Python library that provides a simple API for making HTTP requests and extracting data from HTML content. It integrates with PyQuery for CSS selectors and supports JavaScript rendering through Pyppeteer.

• Key Features:

• Easy-to-use API for making HTTP requests and parsing HTML.

• Supports JavaScript rendering through integration with Pyppeteer.

• Provides features for handling cookies, sessions, and user agents.

• Best Use Case:

• It is ideal for scraping static and dynamic content with minimal setup and configuration.

Lxml

Lxml is a Python library that processes XML and HTML documents. It offers fast and efficient parsing and supports XPath and XSLT.

• Key Features:

• High-performance parsing and querying of XML and HTML documents.

• Supports XPath and XSLT for complex queries and transformations.

• Provides robust error handling and support for various document formats.

• Best Use Case:

• Effective for parsing and extracting data from structured HTML and XML documents.
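The XPath support mentioned above can be sketched as follows. The markup, the class names, and the `extract_titles` helper are assumptions for the example rather than any platform's real structure.

```python
from lxml import html

# Hypothetical markup; class names are invented for illustration.
HTML = """
<div class="catalog">
  <div class="title-card"><h2>Example Show</h2><span class="rating">8.4</span></div>
  <div class="title-card"><h2>Another Title</h2><span class="rating">7.1</span></div>
</div>
"""


def extract_titles(markup: str) -> list[dict]:
    """Use XPath to pull the title and rating out of each card."""
    tree = html.fromstring(markup)
    records = []
    for card in tree.xpath('.//div[@class="title-card"]'):
        # string(...) returns the text content of the first matching node.
        title = card.xpath("string(.//h2)").strip()
        rating = card.xpath('string(.//span[@class="rating"])').strip()
        records.append({"title": title, "rating": float(rating)})
    return records
```

XPath expressions like these tend to be more precise than tag-by-tag navigation when the data sits deep inside a structured document.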

4. API-Based Scraping

Requests

The Requests library is a simple and elegant HTTP library for Python. It is widely used to make HTTP requests and handle responses.

• Key Features:

• Simple and intuitive API for making HTTP requests.

• Supports various request methods, including GET, POST, PUT, and DELETE.

• Provides features for handling cookies, sessions, and authentication.

• Best Use Case:

• Ideal for interacting with APIs and handling HTTP requests and responses.
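Here is a minimal sketch of API-based collection with Requests. The endpoint, the query parameters, and the user-agent string are hypothetical; the example builds the request without sending it so the resulting URL and headers can be inspected, and `fetch_catalog_page` shows how it would be sent.

```python
import requests

API_URL = "https://api.example.com/v1/titles"  # hypothetical endpoint


def make_session() -> requests.Session:
    """Create a session that identifies the client on every request."""
    session = requests.Session()
    session.headers.update(
        {"User-Agent": "catalog-research-bot/0.1", "Accept": "application/json"}
    )
    return session


def build_catalog_request(session, genre: str, page: int):
    """Prepare (but do not send) a GET request for one catalog page."""
    request = requests.Request("GET", API_URL, params={"genre": genre, "page": page})
    return session.prepare_request(request)


def fetch_catalog_page(session, genre: str, page: int) -> dict:
    """Send the prepared request and return the decoded JSON body."""
    response = session.send(build_catalog_request(session, genre, page), timeout=10)
    response.raise_for_status()  # surface 4xx/5xx errors instead of parsing them
    return response.json()


session = make_session()
prepared = build_catalog_request(session, "drama", 2)
```

Reusing one session keeps cookies and connection pooling consistent across requests, which matters once an API requires authentication.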

HTTPX

HTTPX is a fully featured HTTP client for Python 3. It offers both synchronous and asynchronous APIs and is designed for modern web applications.

• Key Features:

• Supports asynchronous requests for improved performance.

• Provides advanced features for handling cookies, sessions, and authentication.

• Offers support for HTTP/1.1 and, as an optional extra, HTTP/2. WebSocket support is not built in and requires a separate package.

• Best Use Case:

• Suitable for making asynchronous HTTP requests and interacting with modern web APIs.

Best Practices for OTT App Scraping

Following best practices helps keep OTT app scraping effective and ethical. You can achieve reliable and responsible results by respecting robots.txt, handling anti-scraping measures, monitoring changes, and ensuring compliance.

Respect Robots.txt: Always review and comply with the OTT platform's robots.txt file. This file states which parts of the site automated clients may and may not access. Following its rules when running an OTT app data scraper helps avoid potential legal issues and keeps your data collection aligned with the platform's wishes.
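A robots.txt check can be sketched with Python's standard `urllib.robotparser`. The rules, the URLs, and the `catalog-research-bot` user agent below are made up for the example; a real scraper would load the live file with `set_url()` and `read()` instead of parsing an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Disallow: /watch/
Crawl-delay: 5
"""

USER_AGENT = "catalog-research-bot"  # hypothetical user agent


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt rules from a string."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
browse_allowed = parser.can_fetch(USER_AGENT, "https://example.com/browse/drama")
watch_allowed = parser.can_fetch(USER_AGENT, "https://example.com/watch/101")
delay_seconds = parser.crawl_delay(USER_AGENT)
```

Checking `can_fetch` before every request, and honoring any crawl delay the file declares, keeps the scraper inside the rules the platform has published.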

Handle Anti-Scraping Measures: Many OTT platforms employ sophisticated anti-scraping measures to prevent unauthorized data extraction. When running a streaming data scraper, it's essential to use techniques such as rotating proxies, managing request rates, and addressing CAPTCHAs to deal with these defenses. Implementing these strategies helps maintain smooth and uninterrupted scraping operations while minimizing the risk of being blocked.
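Of the techniques listed, managing request rates is the simplest to illustrate. The sketch below retries a failed fetch with exponential backoff and a little random jitter. `RetryableError`, `backoff_delays`, and `fetch_with_backoff` are hypothetical names, and the `fetch` callable is whatever function the caller uses to download a URL and raise `RetryableError` on rate-limit responses.

```python
import random
import time


class RetryableError(Exception):
    """Raised by the caller's fetch function on rate-limit style failures."""


def backoff_delays(base=1.0, factor=2.0, max_delay=60.0, attempts=5):
    """Return the wait (in seconds) before each retry, capped at max_delay."""
    return [min(max_delay, base * factor**i) for i in range(attempts)]


def fetch_with_backoff(fetch, url, attempts=5, sleep=time.sleep):
    """Call fetch(url), waiting longer after each retryable failure."""
    delays = backoff_delays(attempts=attempts)
    for attempt, delay in enumerate(delays, start=1):
        try:
            return fetch(url)
        except RetryableError:
            if attempt == attempts:
                raise  # out of retries; let the caller decide what to do
            # Jitter avoids many workers retrying at the same instant.
            sleep(delay + random.uniform(0, delay * 0.1))
```

Passing `sleep` as a parameter is a design choice that makes the function easy to exercise in tests without actually waiting.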

Monitor and Adapt: Continuously monitor the target OTT platform for any changes in its structure or content. Since websites frequently update layouts and technology, an effective OTT app data scraping service must be adaptable. Adjust your scraping strategy accordingly to ensure ongoing accuracy and efficiency in data collection.
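One possible way to detect layout changes is to fingerprint a page's tag and class structure and compare it between runs. The sketch below uses only the standard library; `structure_fingerprint` is a hypothetical helper, and the sample markup is invented.

```python
import hashlib
from html.parser import HTMLParser


class _TagCollector(HTMLParser):
    """Record each opening tag together with its sorted class names."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        classes = dict(attrs).get("class") or ""
        self.tags.append(f"{tag}.{'.'.join(sorted(classes.split()))}")


def structure_fingerprint(html: str) -> str:
    """Hash the page's tag/class skeleton, ignoring its text content."""
    collector = _TagCollector()
    collector.feed(html)
    skeleton = "|".join(collector.tags)
    return hashlib.sha256(skeleton.encode("utf-8")).hexdigest()


OLD = '<div class="card"><h2>Example Show</h2></div>'
NEW_TEXT = '<div class="card"><h2>Another Title</h2></div>'
NEW_LAYOUT = '<div class="tile"><h3>Example Show</h3></div>'
```

With this approach, `OLD` and `NEW_TEXT` share a fingerprint because only the text differs, while `NEW_LAYOUT` produces a different one, which can serve as a signal that selectors need review.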

Ensure Compliance: It is vital that OTT app data scraping services adhere to legal and ethical standards. Avoid scraping sensitive or personal information and always follow the platform's terms of service. By maintaining compliance, you safeguard your activities against legal repercussions and uphold ethical practices in data scraping.

Conclusion

Extracting data from OTT apps can provide valuable insights into content trends, user preferences, and competitive dynamics. You can effectively gather and analyze data from these platforms by leveraging the right tools and libraries. Whether you are dealing with static or dynamic content, handling JavaScript, or interacting with APIs, a wide range of tools is available to meet your scraping needs. By following best practices and respecting legal and ethical guidelines, you can ensure that your scraping activities are effective and compliant.

With advancements in web scraping technologies and the growing importance of data in the streaming industry, mastering these tools and techniques will enable you to stay ahead in the competitive landscape of OTT platforms.

Embrace the potential of OTT Scrape to unlock these insights and stay ahead in the competitive world of streaming!